Fitting latent variables onto data - Exercises & Answers#

1. Grit revisited - are we sure its there?#

Armed with new knowledge of CFA, we can now examine directly whether the two-factor structure claimed in the research literature exists in the data we have to hand. While our use of EFA indirectly explored the latent variables in that dataset, with CFA we explicitly test the presence of two factors and set certain questions to load onto these variables.

First, lets re-obtain the data that has the grit questionnaire in it.

Download the data from this link: https://openpsychometrics.org/_rawdata/duckworth-grit-scale-data.zip

You will need to unzip it and grab the data.csv file.

Import everything we need first, adding semopy to the list of packages we will use.

Important#

After importing everything, run this command just underneath your imports. It’ll ensure the results will match when running this code elsehwere.

np.random.seed(36)

Show code cell source

# Your answer here

# Import what we need

import pandas as pd # dataframes

import seaborn as sns # plots

import statsmodels.formula.api as smf # Models

import marginaleffects as me # marginal effects

import numpy as np # numpy for some functions

import pingouin as pg

from factor_analyzer import FactorAnalyzer # Note we write from factor_analyzer

from horns import parallel_analysis

import semopy as sem # semopy imported here

np.random.seed(36)

Read in the data into a dataframe called grit, specifying the separator as ‘\t’ (sep=’\t’), as before.

Show code cell source

# Your answer here

# Read in

grit = pd.read_csv('data.csv', sep='\t')

grit.head(10)

| country | surveyelapse | GS1 | GS2 | GS3 | GS4 | GS5 | GS6 | GS7 | GS8 | ... | O7 | O8 | O9 | O10 | operatingsystem | browser | screenw | screenh | introelapse | testelapse | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | RO | 174 | 1 | 1 | 3 | 3 | 3 | 2 | 3 | 1 | ... | 5 | 4 | 5 | 4 | Windows | Chrome | 1366 | 768 | 69590 | 307 |

| 1 | US | 120 | 2 | 2 | 3 | 3 | 2 | 1 | 3 | 3 | ... | 4 | 3 | 4 | 5 | Macintosh | Chrome | 1280 | 800 | 33657 | 134 |

| 2 | US | 99 | 3 | 3 | 3 | 3 | 4 | 3 | 4 | 4 | ... | 5 | 5 | 4 | 4 | Windows | Firefox | 1920 | 1080 | 95550 | 138 |

| 3 | KE | 5098 | 1 | 3 | 4 | 2 | 4 | 1 | 5 | 4 | ... | 4 | 2 | 5 | 4 | Windows | Chrome | 1600 | 900 | 4 | 4440 |

| 4 | JP | 340 | 1 | 2 | 3 | 3 | 2 | 2 | 2 | 4 | ... | 4 | 1 | 3 | 2 | Windows | Firefox | 1920 | 1080 | 3 | 337 |

| 5 | AU | 515 | 1 | 2 | 5 | 1 | 3 | 1 | 4 | 5 | ... | 5 | 2 | 5 | 5 | Windows | Chrome | 1920 | 1080 | 2090 | 554 |

| 6 | US | 126 | 2 | 1 | 3 | 4 | 1 | 1 | 1 | 1 | ... | 5 | 5 | 5 | 5 | Windows | Chrome | 1366 | 768 | 36 | 212 |

| 7 | RO | 208 | 3 | 1 | 1 | 4 | 1 | 3 | 4 | 4 | ... | 5 | 3 | 4 | 3 | Windows | Chrome | 1366 | 768 | 6 | 207 |

| 8 | EU | 130 | 1 | 3 | 3 | 1 | 4 | 1 | 5 | 4 | ... | 5 | 1 | 4 | 5 | Windows | Microsoft Internet Explorer | 1600 | 1000 | 14 | 183 |

| 9 | NZ | 129 | 2 | 3 | 2 | 2 | 4 | 2 | 4 | 4 | ... | 4 | 3 | 4 | 4 | Macintosh | Chrome | 1440 | 900 | 68 | 143 |

10 rows × 98 columns

Like before, get the grit-related columns by keeping only the columns with ‘GS’ in them. Store in a dataframe called grit2.

Show code cell source

# Your answer here

grit2 = grit.filter(regex='GS\d+')

grit2.head()

| GS1 | GS2 | GS3 | GS4 | GS5 | GS6 | GS7 | GS8 | GS9 | GS10 | GS11 | GS12 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | 3 | 3 | 3 | 2 | 3 | 1 | 3 | 2 | 3 | 3 |

| 1 | 2 | 2 | 3 | 3 | 2 | 1 | 3 | 3 | 2 | 1 | 3 | 2 |

| 2 | 3 | 3 | 3 | 3 | 4 | 3 | 4 | 4 | 3 | 3 | 3 | 3 |

| 3 | 1 | 3 | 4 | 2 | 4 | 1 | 5 | 4 | 1 | 1 | 3 | 1 |

| 4 | 1 | 2 | 3 | 3 | 2 | 2 | 2 | 4 | 3 | 3 | 4 | 4 |

Building a latent factor model of grit#

We take the model directly stated from the original Duckworth et al (2007) paper that described the grit scale. The questions loading onto the two latent grit factors are as follows:

Consistency in interest

GS2

GS3

GS5

GS7

GS8

GS11

Perseverance in effort

GS1

GS4

GS6

GS9

GS10

GS12

With that in mind, create a CFA model that tests whether the two latent variables (consistency and perseverance) are measured by the above stated questionnaire variables. Create it and fit it in semopy.

Show code cell source

# Your answer here

# Model string

mdspec = """

consistency =~ GS2 + GS3 + GS5 + GS7 + GS8 + GS11

perseverance =~ GS1 + GS4 + GS6 + GS9 + GS10 + GS12

"""

# Create model

model = sem.Model(mdspec)

# Fit it

model.fit(grit2)

SolverResult(fun=0.3782276478265812, success=True, n_it=24, x=array([ 1.122537 , 1.36719906, 1.39375519, 1.44706539, 0.80596871,

0.85883226, 1.32252661, 1.43824065, 1.57281968, 1.28650847,

0.68358768, 1.09217894, 0.98222584, 0.57969277, 0.8487556 ,

0.96281907, 1.19213773, 0.82104992, 0.57220757, 0.61039529,

0.78652695, 0.66075116, 0.45345085, -0.2184887 , 0.29510074]), message='Optimization terminated successfully', name_method='SLSQP', name_obj='MLW')

If you have fitted the model successfully, inspect the standardised loadings. Do they appear significant and sensible?

Show code cell source

# Your answer here

model.inspect(std_est=True)

| lval | op | rval | Estimate | Est. Std | Std. Err | z-value | p-value | |

|---|---|---|---|---|---|---|---|---|

| 0 | GS2 | ~ | consistency | 1.000000 | 0.590099 | - | - | - |

| 1 | GS3 | ~ | consistency | 1.122537 | 0.610272 | 0.035904 | 31.264817 | 0.0 |

| 2 | GS5 | ~ | consistency | 1.367199 | 0.712711 | 0.039375 | 34.72214 | 0.0 |

| 3 | GS7 | ~ | consistency | 1.393755 | 0.768558 | 0.038401 | 36.295186 | 0.0 |

| 4 | GS8 | ~ | consistency | 1.447065 | 0.739557 | 0.040748 | 35.512611 | 0.0 |

| 5 | GS11 | ~ | consistency | 0.805969 | 0.480314 | 0.030976 | 26.019401 | 0.0 |

| 6 | GS1 | ~ | perseverance | 1.000000 | 0.549115 | - | - | - |

| 7 | GS4 | ~ | perseverance | 0.858832 | 0.392929 | 0.041181 | 20.85489 | 0.0 |

| 8 | GS6 | ~ | perseverance | 1.322527 | 0.688657 | 0.042987 | 30.765874 | 0.0 |

| 9 | GS9 | ~ | perseverance | 1.438241 | 0.692969 | 0.046594 | 30.867441 | 0.0 |

| 10 | GS10 | ~ | perseverance | 1.572820 | 0.632947 | 0.053624 | 29.330766 | 0.0 |

| 11 | GS12 | ~ | perseverance | 1.286508 | 0.676221 | 0.042229 | 30.464843 | 0.0 |

| 12 | consistency | ~~ | consistency | 0.453451 | 1.000000 | 0.023116 | 19.616305 | 0.0 |

| 13 | consistency | ~~ | perseverance | -0.218489 | -0.597281 | 0.010538 | -20.732445 | 0.0 |

| 14 | perseverance | ~~ | perseverance | 0.295101 | 1.000000 | 0.016762 | 17.605834 | 0.0 |

| 15 | GS1 | ~~ | GS1 | 0.683588 | 0.698473 | 0.016434 | 41.597162 | 0.0 |

| 16 | GS10 | ~~ | GS10 | 1.092179 | 0.599378 | 0.027985 | 39.027627 | 0.0 |

| 17 | GS11 | ~~ | GS11 | 0.982226 | 0.769299 | 0.02249 | 43.672973 | 0.0 |

| 18 | GS12 | ~~ | GS12 | 0.579693 | 0.542725 | 0.015614 | 37.126657 | 0.0 |

| 19 | GS2 | ~~ | GS2 | 0.848756 | 0.651783 | 0.02037 | 41.667457 | 0.0 |

| 20 | GS3 | ~~ | GS3 | 0.962819 | 0.627568 | 0.023394 | 41.157212 | 0.0 |

| 21 | GS4 | ~~ | GS4 | 1.192138 | 0.845607 | 0.026929 | 44.269472 | 0.0 |

| 22 | GS5 | ~~ | GS5 | 0.821050 | 0.492043 | 0.021991 | 37.336067 | 0.0 |

| 23 | GS6 | ~~ | GS6 | 0.572208 | 0.525751 | 0.015686 | 36.478381 | 0.0 |

| 24 | GS7 | ~~ | GS7 | 0.610395 | 0.409318 | 0.018072 | 33.77512 | 0.0 |

| 25 | GS8 | ~~ | GS8 | 0.786527 | 0.453055 | 0.021966 | 35.806484 | 0.0 |

| 26 | GS9 | ~~ | GS9 | 0.660751 | 0.519794 | 0.018232 | 36.241337 | 0.0 |

Finally, check the fit statistics. Is this model any good? Does it describe the data well?

Show code cell source

# Your answer here

sem.calc_stats(model)

| DoF | DoF Baseline | chi2 | chi2 p-value | chi2 Baseline | CFI | GFI | AGFI | NFI | TLI | RMSEA | AIC | BIC | LogLik | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Value | 53 | 66 | 1615.032056 | 0.0 | 15958.962524 | 0.901715 | 0.898801 | 0.873979 | 0.898801 | 0.877608 | 0.083089 | 49.243545 | 208.227772 | 0.378228 |

What do the guidelines suggest we should do with this model? Do the statistics suggest it fits the model well?

Show code cell source

# Your answer here

# Not fully. its close in many regards but only just squeaks across the line.

2. Grit and conscientiousness#

Let us now expand the use of CFA and dip into the idea of SEM a little. Here we’ll expand our previous exercise where we conducted an EFA on scores from the Big 5 trait Conscientiousness at the same time as the grit scale. In the following we’ll see what happens if we specify our grit model like we just did, but also include the data for Conscientiousness and its associated questions. As such, we’ll find three latent variables (the two grit related ones from the grit questionnaire, and one for Conscientiousness).

First, get the right questions out of the main grit dataframe and store it in a dataframe called grit_consc. It should include all questions with GS in them and C.

Show code cell source

# Your answer here

# Get grit and conscientiousness

grit_consc_names = ['GS1', 'GS2', 'GS3', 'GS4', 'GS5', 'GS6', 'GS7', 'GS8', 'GS9', 'GS10', 'GS11', 'GS12',

'C1', 'C2', 'C3', 'C4', 'C5', 'C6', 'C7', 'C8', 'C9', 'C10']

# Extract them

grit_consc = grit[grit_consc_names]

# Show

grit_consc.head()

| GS1 | GS2 | GS3 | GS4 | GS5 | GS6 | GS7 | GS8 | GS9 | GS10 | ... | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | 3 | 3 | 3 | 2 | 3 | 1 | 3 | 2 | ... | 2 | 4 | 4 | 3 | 2 | 4 | 3 | 2 | 2 | 4 |

| 1 | 2 | 2 | 3 | 3 | 2 | 1 | 3 | 3 | 2 | 1 | ... | 4 | 3 | 4 | 3 | 1 | 3 | 5 | 2 | 5 | 3 |

| 2 | 3 | 3 | 3 | 3 | 4 | 3 | 4 | 4 | 3 | 3 | ... | 2 | 2 | 4 | 2 | 3 | 4 | 5 | 3 | 3 | 4 |

| 3 | 1 | 3 | 4 | 2 | 4 | 1 | 5 | 4 | 1 | 1 | ... | 4 | 1 | 5 | 1 | 4 | 1 | 4 | 1 | 4 | 3 |

| 4 | 1 | 2 | 3 | 3 | 2 | 2 | 2 | 4 | 3 | 3 | ... | 3 | 1 | 3 | 1 | 4 | 2 | 3 | 2 | 3 | 4 |

5 rows × 22 columns

Set up a CFA model in which the two grit latent variables are captured exactly like in the last exercise, and the conscientiousness variable is captured by its own questions.

Show code cell source

# Your answer here

# Model string

mdspec = """

consistency =~ GS2 + GS3 + GS5 + GS7 + GS8 + GS11

perseverance =~ GS1 + GS4 + GS6 + GS9 + GS10 + GS12

conscientious =~ C1 + C2 + C3 + C4 + C5 + C6 + C7 + C8 + C9 + C10

"""

# Create model

model = sem.Model(mdspec)

# Fit it

model.fit(grit_consc)

SolverResult(fun=0.9710712086824149, success=True, n_it=48, x=array([ 1.09283328, 1.33418234, 1.36291197, 1.42914314, 0.78710305,

0.85078183, 1.39157688, 1.49479537, 1.55776988, 1.34527766,

-0.94991487, 0.48338527, -1.02911509, 1.07204046, -1.08695932,

0.76819775, -0.8109484 , 1.0327781 , 0.65677164, 0.75019843,

0.79143721, 1.38136428, 0.83958205, 1.02988488, 0.96542705,

1.30328443, 0.96080557, 0.90506302, 0.95856894, 0.70078748,

1.14495165, 0.98523927, 0.56304364, 0.83198401, 0.97233703,

1.20771199, 0.83141956, 0.54803132, 0.61782435, 0.77576666,

0.64744105, 0.56479771, 0.32254792, -0.29715799, 0.47034206,

-0.21648202, 0.27902947]), message='Optimization terminated successfully', name_method='SLSQP', name_obj='MLW')

Inspect the standardised loadings once the model has been estimated.

Show code cell source

# Your answer here

model.inspect(std_est=True)

| lval | op | rval | Estimate | Est. Std | Std. Err | z-value | p-value | |

|---|---|---|---|---|---|---|---|---|

| 0 | GS2 | ~ | consistency | 1.000000 | 0.600962 | - | - | - |

| 1 | GS3 | ~ | consistency | 1.092833 | 0.605118 | 0.034543 | 31.6372 | 0.0 |

| 2 | GS5 | ~ | consistency | 1.334182 | 0.708337 | 0.037735 | 35.35657 | 0.0 |

| 3 | GS7 | ~ | consistency | 1.362912 | 0.765356 | 0.03673 | 37.106282 | 0.0 |

| 4 | GS8 | ~ | consistency | 1.429143 | 0.743799 | 0.039179 | 36.476844 | 0.0 |

| 5 | GS11 | ~ | consistency | 0.787103 | 0.477756 | 0.029984 | 26.250568 | 0.0 |

| 6 | GS1 | ~ | perseverance | 1.000000 | 0.533645 | - | - | - |

| 7 | GS4 | ~ | perseverance | 0.850782 | 0.378515 | 0.041879 | 20.315416 | 0.0 |

| 8 | GS6 | ~ | perseverance | 1.391577 | 0.704603 | 0.044876 | 31.009702 | 0.0 |

| 9 | GS9 | ~ | perseverance | 1.494795 | 0.700406 | 0.048353 | 30.914318 | 0.0 |

| 10 | GS10 | ~ | perseverance | 1.557770 | 0.609603 | 0.054507 | 28.57938 | 0.0 |

| 11 | GS12 | ~ | perseverance | 1.345278 | 0.687617 | 0.04394 | 30.616507 | 0.0 |

| 12 | C1 | ~ | conscientious | 1.000000 | 0.655366 | - | - | - |

| 13 | C2 | ~ | conscientious | -0.949915 | -0.519141 | 0.032092 | -29.599427 | 0.0 |

| 14 | C3 | ~ | conscientious | 0.483385 | 0.368559 | 0.022368 | 21.61034 | 0.0 |

| 15 | C4 | ~ | conscientious | -1.029115 | -0.606145 | 0.030385 | -33.868641 | 0.0 |

| 16 | C5 | ~ | conscientious | 1.072040 | 0.634066 | 0.030477 | 35.175309 | 0.0 |

| 17 | C6 | ~ | conscientious | -1.086959 | -0.581919 | 0.033231 | -32.708767 | 0.0 |

| 18 | C7 | ~ | conscientious | 0.768198 | 0.507498 | 0.026483 | 29.007307 | 0.0 |

| 19 | C8 | ~ | conscientious | -0.810948 | -0.539424 | 0.026485 | -30.619698 | 0.0 |

| 20 | C9 | ~ | conscientious | 1.032778 | 0.621230 | 0.029867 | 34.578726 | 0.0 |

| 21 | C10 | ~ | conscientious | 0.656772 | 0.485152 | 0.023576 | 27.858042 | 0.0 |

| 22 | conscientious | ~~ | conscientious | 0.564798 | 1.000000 | 0.025115 | 22.488144 | 0.0 |

| 23 | conscientious | ~~ | consistency | 0.322548 | 0.625808 | 0.013958 | 23.108344 | 0.0 |

| 24 | conscientious | ~~ | perseverance | -0.297158 | -0.748542 | 0.012763 | -23.282097 | 0.0 |

| 25 | consistency | ~~ | consistency | 0.470342 | 1.000000 | 0.023376 | 20.121145 | 0.0 |

| 26 | consistency | ~~ | perseverance | -0.216482 | -0.597572 | 0.010434 | -20.746923 | 0.0 |

| 27 | perseverance | ~~ | perseverance | 0.279029 | 1.000000 | 0.016157 | 17.270103 | 0.0 |

| 28 | C1 | ~~ | C1 | 0.750198 | 0.570495 | 0.018656 | 40.211662 | 0.0 |

| 29 | C10 | ~~ | C10 | 0.791437 | 0.764628 | 0.018083 | 43.767014 | 0.0 |

| 30 | C2 | ~~ | C2 | 1.381364 | 0.730493 | 0.031916 | 43.280672 | 0.0 |

| 31 | C3 | ~~ | C3 | 0.839582 | 0.864165 | 0.018673 | 44.962816 | 0.0 |

| 32 | C4 | ~~ | C4 | 1.029885 | 0.632588 | 0.024763 | 41.589541 | 0.0 |

| 33 | C5 | ~~ | C5 | 0.965427 | 0.597961 | 0.02363 | 40.856799 | 0.0 |

| 34 | C6 | ~~ | C6 | 1.303284 | 0.661370 | 0.030928 | 42.139464 | 0.0 |

| 35 | C7 | ~~ | C7 | 0.960806 | 0.742446 | 0.02211 | 43.456143 | 0.0 |

| 36 | C8 | ~~ | C8 | 0.905063 | 0.709022 | 0.021072 | 42.950379 | 0.0 |

| 37 | C9 | ~~ | C9 | 0.958569 | 0.614074 | 0.023262 | 41.208169 | 0.0 |

| 38 | GS1 | ~~ | GS1 | 0.700787 | 0.715223 | 0.016475 | 42.536573 | 0.0 |

| 39 | GS10 | ~~ | GS10 | 1.144952 | 0.628384 | 0.02811 | 40.73177 | 0.0 |

| 40 | GS11 | ~~ | GS11 | 0.985239 | 0.771750 | 0.022495 | 43.798237 | 0.0 |

| 41 | GS12 | ~~ | GS12 | 0.563044 | 0.527183 | 0.014875 | 37.852765 | 0.0 |

| 42 | GS2 | ~~ | GS2 | 0.831984 | 0.638845 | 0.020011 | 41.576408 | 0.0 |

| 43 | GS3 | ~~ | GS3 | 0.972337 | 0.633833 | 0.023445 | 41.47376 | 0.0 |

| 44 | GS4 | ~~ | GS4 | 1.207712 | 0.856727 | 0.027035 | 44.672902 | 0.0 |

| 45 | GS5 | ~~ | GS5 | 0.831420 | 0.498259 | 0.021946 | 37.885028 | 0.0 |

| 46 | GS6 | ~~ | GS6 | 0.548031 | 0.503535 | 0.014807 | 37.011208 | 0.0 |

| 47 | GS7 | ~~ | GS7 | 0.617824 | 0.414231 | 0.017916 | 34.484275 | 0.0 |

| 48 | GS8 | ~~ | GS8 | 0.775767 | 0.446763 | 0.021583 | 35.942844 | 0.0 |

| 49 | GS9 | ~~ | GS9 | 0.647441 | 0.509432 | 0.017391 | 37.228265 | 0.0 |

Take a look at the model fit statistics now. What does this suggest to us?

Show code cell source

# Your answer here

sem.calc_stats(model)

| DoF | DoF Baseline | chi2 | chi2 p-value | chi2 Baseline | CFI | GFI | AGFI | NFI | TLI | RMSEA | AIC | BIC | LogLik | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Value | 206 | 231 | 4146.474061 | 0.0 | 29702.88149 | 0.866297 | 0.860402 | 0.84346 | 0.860402 | 0.850071 | 0.066939 | 92.057858 | 390.948206 | 0.971071 |

What should we conclude? Do these three separate latent factors represent the data well?

Show code cell source

# Your answer here

# Not quite!

3. Latent regressions#

For the final trick, lets expand the last model we made but include an additional part - this time, we’ll use the two grit latent variables to predict the latent conscientiousness score. We’d like to see how well these latent variables can predict conscientiousness. If they are measuring the ‘same’ kind of trait, then we’d expect to see high coefficients for these.

Rebuild the model from question 2 but include an additional part where the two grit latent variables predict laten conscientiousness.

Show code cell source

# Your answer here

# Model string

mdspec = """

consistency =~ GS2 + GS3 + GS5 + GS7 + GS8 + GS11

perseverance =~ GS1 + GS4 + GS6 + GS9 + GS10 + GS12

conscientious =~ C1 + C2 + C3 + C4 + C5 + C6 + C7 + C8 + C9 + C10

conscientious ~ consistency + perseverance

"""

# Create model

model = sem.Model(mdspec)

# Fit it

model.fit(grit_consc)

SolverResult(fun=0.9710712122615321, success=True, n_it=44, x=array([ 1.09372032, 1.33481513, 1.36326389, 1.42906892, 0.78786201,

0.8498987 , 1.3911391 , 1.4946746 , 1.55663121, 1.34467594,

-0.94951483, 0.48329874, -1.02884432, 1.07183657, -1.0865845 ,

0.768163 , -0.81085453, 1.03271978, 0.65668753, 0.30459112,

-0.82905879, 0.75009116, 0.79133413, 1.38197185, 0.83954118,

1.03002355, 0.96546841, 1.30376258, 0.96068456, 0.9050144 ,

0.95839995, 0.70040618, 1.14535444, 0.98499342, 0.56307038,

0.83191806, 0.9719378 , 1.20764937, 0.83116974, 0.54796473,

0.61777322, 0.7761055 , 0.64744345, 0.22025482, 0.46986339,

-0.21635048, 0.27907538]), message='Optimization terminated successfully', name_method='SLSQP', name_obj='MLW')

Once estimated, inspect the coefficients of that latent regression.

Show code cell source

# Your answer here

model.inspect(std_est=True)

| lval | op | rval | Estimate | Est. Std | Std. Err | z-value | p-value | |

|---|---|---|---|---|---|---|---|---|

| 0 | conscientious | ~ | consistency | 0.304591 | 0.277782 | 0.022774 | 13.37429 | 0.0 |

| 1 | conscientious | ~ | perseverance | -0.829059 | -0.582703 | 0.038286 | -21.654358 | 0.0 |

| 2 | GS2 | ~ | consistency | 1.000000 | 0.600782 | - | - | - |

| 3 | GS3 | ~ | consistency | 1.093720 | 0.605312 | 0.034572 | 31.636412 | 0.0 |

| 4 | GS5 | ~ | consistency | 1.334815 | 0.708377 | 0.037764 | 35.346361 | 0.0 |

| 5 | GS7 | ~ | consistency | 1.363264 | 0.765289 | 0.036755 | 37.09102 | 0.0 |

| 6 | GS8 | ~ | consistency | 1.429069 | 0.743540 | 0.039199 | 36.456336 | 0.0 |

| 7 | GS11 | ~ | consistency | 0.787862 | 0.477969 | 0.030008 | 26.255331 | 0.0 |

| 8 | GS1 | ~ | perseverance | 1.000000 | 0.533780 | - | - | - |

| 9 | GS4 | ~ | perseverance | 0.849899 | 0.378213 | 0.041859 | 20.303666 | 0.0 |

| 10 | GS6 | ~ | perseverance | 1.391139 | 0.704542 | 0.044855 | 31.014121 | 0.0 |

| 11 | GS9 | ~ | perseverance | 1.494675 | 0.700406 | 0.04834 | 30.920059 | 0.0 |

| 12 | GS10 | ~ | perseverance | 1.556631 | 0.609287 | 0.054476 | 28.574866 | 0.0 |

| 13 | GS12 | ~ | perseverance | 1.344676 | 0.687476 | 0.043917 | 30.618746 | 0.0 |

| 14 | C1 | ~ | conscientious | 1.000000 | 0.655438 | - | - | - |

| 15 | C2 | ~ | conscientious | -0.949515 | -0.518943 | 0.032088 | -29.591337 | 0.0 |

| 16 | C3 | ~ | conscientious | 0.483299 | 0.368548 | 0.022364 | 21.610512 | 0.0 |

| 17 | C4 | ~ | conscientious | -1.028844 | -0.606064 | 0.030378 | -33.867662 | 0.0 |

| 18 | C5 | ~ | conscientious | 1.071837 | 0.634031 | 0.03047 | 35.176885 | 0.0 |

| 19 | C6 | ~ | conscientious | -1.086584 | -0.581762 | 0.033225 | -32.703737 | 0.0 |

| 20 | C7 | ~ | conscientious | 0.768163 | 0.507550 | 0.026478 | 29.01177 | 0.0 |

| 21 | C8 | ~ | conscientious | -0.810855 | -0.539436 | 0.026479 | -30.622414 | 0.0 |

| 22 | C9 | ~ | conscientious | 1.032720 | 0.621287 | 0.029861 | 34.584457 | 0.0 |

| 23 | C10 | ~ | conscientious | 0.656688 | 0.485173 | 0.02357 | 27.860751 | 0.0 |

| 24 | conscientious | ~~ | conscientious | 0.220255 | 0.389878 | 0.011722 | 18.790372 | 0.0 |

| 25 | consistency | ~~ | consistency | 0.469863 | 1.000000 | 0.023361 | 20.113187 | 0.0 |

| 26 | consistency | ~~ | perseverance | -0.216350 | -0.597463 | 0.010429 | -20.744672 | 0.0 |

| 27 | perseverance | ~~ | perseverance | 0.279075 | 1.000000 | 0.016155 | 17.274642 | 0.0 |

| 28 | C1 | ~~ | C1 | 0.750091 | 0.570401 | 0.018655 | 40.209597 | 0.0 |

| 29 | C10 | ~~ | C10 | 0.791334 | 0.764607 | 0.018081 | 43.766842 | 0.0 |

| 30 | C2 | ~~ | C2 | 1.381972 | 0.730698 | 0.031928 | 43.283861 | 0.0 |

| 31 | C3 | ~~ | C3 | 0.839541 | 0.864173 | 0.018672 | 44.962958 | 0.0 |

| 32 | C4 | ~~ | C4 | 1.030024 | 0.632686 | 0.024765 | 41.591692 | 0.0 |

| 33 | C5 | ~~ | C5 | 0.965468 | 0.598005 | 0.02363 | 40.858009 | 0.0 |

| 34 | C6 | ~~ | C6 | 1.303763 | 0.661553 | 0.030937 | 42.142977 | 0.0 |

| 35 | C7 | ~~ | C7 | 0.960685 | 0.742393 | 0.022107 | 43.455503 | 0.0 |

| 36 | C8 | ~~ | C8 | 0.905014 | 0.709009 | 0.021071 | 42.950317 | 0.0 |

| 37 | C9 | ~~ | C9 | 0.958400 | 0.614002 | 0.023258 | 41.206853 | 0.0 |

| 38 | GS1 | ~~ | GS1 | 0.700406 | 0.715078 | 0.016468 | 42.532551 | 0.0 |

| 39 | GS10 | ~~ | GS10 | 1.145354 | 0.628769 | 0.028115 | 40.738819 | 0.0 |

| 40 | GS11 | ~~ | GS11 | 0.984993 | 0.771545 | 0.022491 | 43.79462 | 0.0 |

| 41 | GS12 | ~~ | GS12 | 0.563070 | 0.527377 | 0.014874 | 37.856134 | 0.0 |

| 42 | GS2 | ~~ | GS2 | 0.831918 | 0.639061 | 0.020008 | 41.579265 | 0.0 |

| 43 | GS3 | ~~ | GS3 | 0.971938 | 0.633597 | 0.023439 | 41.467314 | 0.0 |

| 44 | GS4 | ~~ | GS4 | 1.207649 | 0.856955 | 0.027032 | 44.675186 | 0.0 |

| 45 | GS5 | ~~ | GS5 | 0.831170 | 0.498202 | 0.021942 | 37.880345 | 0.0 |

| 46 | GS6 | ~~ | GS6 | 0.547965 | 0.503621 | 0.014806 | 37.010857 | 0.0 |

| 47 | GS7 | ~~ | GS7 | 0.617773 | 0.414332 | 0.017914 | 34.485515 | 0.0 |

| 48 | GS8 | ~~ | GS8 | 0.776105 | 0.447148 | 0.021585 | 35.955693 | 0.0 |

| 49 | GS9 | ~~ | GS9 | 0.647443 | 0.509432 | 0.017393 | 37.224808 | 0.0 |

By how much does an increase in latent grit alter conscientiousness?

4. Testing the presence of the Big Five - in Big Data#

We’ll now test the presence of the Big Five in a massive dataset of over 1 million respondents! You can find the dataset here: https://openpsychometrics.org/_rawdata/IPIP-FFM-data-8Nov2018.zip

Download it, unzip it, and read in the data-final.csv file, which is an enormous dataset containing responses to a Big 5 Questionnaire (the IPIP). These are the questions, the short-hand prefixes showing what trait the question is measuring:

EXT1 - I am the life of the party.

EXT2 - I don’t talk a lot.

EXT3 - I feel comfortable around people.

EXT4 - I keep in the background.

EXT5 - I start conversations.

EXT6 - I have little to say.

EXT7 - I talk to a lot of different people at parties.

EXT8 - I don’t like to draw attention to myself.

EXT9 - I don’t mind being the center of attention.

EXT10 - I am quiet around strangers.

EST1 - I get stressed out easily.

EST2 - I am relaxed most of the time.

EST3 - I worry about things.

EST4 - I seldom feel blue.

EST5 - I am easily disturbed.

EST6 - I get upset easily.

EST7 - I change my mood a lot.

EST8 - I have frequent mood swings.

EST9 - I get irritated easily.

EST10 - I often feel blue.

AGR1 - I feel little concern for others.

AGR2 - I am interested in people.

AGR3 - I insult people.

AGR4 - I sympathize with others’ feelings.

AGR5 - I am not interested in other people’s problems.

AGR6 - I have a soft heart.

AGR7 - I am not really interested in others.

AGR8 - I take time out for others.

AGR9 - I feel others’ emotions.

AGR10 - I make people feel at ease.

CSN1 - I am always prepared.

CSN2 - I leave my belongings around.

CSN3 - I pay attention to details.

CSN4 - I make a mess of things.

CSN5 - I get chores done right away.

CSN6 - I often forget to put things back in their proper place.

CSN7 - I like order.

CSN8 - I shirk my duties.

CSN9 - I follow a schedule.

CSN10 - I am exacting in my work.

OPN1 - I have a rich vocabulary.

OPN2 - I have difficulty understanding abstract ideas.

OPN3 - I have a vivid imagination.

OPN4 - I am not interested in abstract ideas.

OPN5 - I have excellent ideas.

OPN6 - I do not have a good imagination.

OPN7 - I am quick to understand things.

OPN8 - I use difficult words.

OPN9 - I spend time reflecting on things.

OPN10 - I am full of ideas.

Show code cell source

# Your answer here

big5 = pd.read_csv('data-final.csv', sep='\t')

big5.head()

| EXT1 | EXT2 | EXT3 | EXT4 | EXT5 | EXT6 | EXT7 | EXT8 | EXT9 | EXT10 | ... | dateload | screenw | screenh | introelapse | testelapse | endelapse | IPC | country | lat_appx_lots_of_err | long_appx_lots_of_err | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 4.0 | 1.0 | 5.0 | 2.0 | 5.0 | 1.0 | 5.0 | 2.0 | 4.0 | 1.0 | ... | 2016-03-03 02:01:01 | 768.0 | 1024.0 | 9.0 | 234.0 | 6 | 1 | GB | 51.5448 | 0.1991 |

| 1 | 3.0 | 5.0 | 3.0 | 4.0 | 3.0 | 3.0 | 2.0 | 5.0 | 1.0 | 5.0 | ... | 2016-03-03 02:01:20 | 1360.0 | 768.0 | 12.0 | 179.0 | 11 | 1 | MY | 3.1698 | 101.706 |

| 2 | 2.0 | 3.0 | 4.0 | 4.0 | 3.0 | 2.0 | 1.0 | 3.0 | 2.0 | 5.0 | ... | 2016-03-03 02:01:56 | 1366.0 | 768.0 | 3.0 | 186.0 | 7 | 1 | GB | 54.9119 | -1.3833 |

| 3 | 2.0 | 2.0 | 2.0 | 3.0 | 4.0 | 2.0 | 2.0 | 4.0 | 1.0 | 4.0 | ... | 2016-03-03 02:02:02 | 1920.0 | 1200.0 | 186.0 | 219.0 | 7 | 1 | GB | 51.75 | -1.25 |

| 4 | 3.0 | 3.0 | 3.0 | 3.0 | 5.0 | 3.0 | 3.0 | 5.0 | 3.0 | 4.0 | ... | 2016-03-03 02:02:57 | 1366.0 | 768.0 | 8.0 | 315.0 | 17 | 2 | KE | 1.0 | 38.0 |

5 rows × 110 columns

There are some other columns which can be gotten rid of by running the following code:

Show code cell source

# Run this to keep only needed columns

big5 = big5.filter(regex='[A-Z]\d+').loc[:, lambda x: ~x.columns.str.contains('_E')]

big5.head()

| EXT1 | EXT2 | EXT3 | EXT4 | EXT5 | EXT6 | EXT7 | EXT8 | EXT9 | EXT10 | ... | OPN1 | OPN2 | OPN3 | OPN4 | OPN5 | OPN6 | OPN7 | OPN8 | OPN9 | OPN10 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 4.0 | 1.0 | 5.0 | 2.0 | 5.0 | 1.0 | 5.0 | 2.0 | 4.0 | 1.0 | ... | 5.0 | 1.0 | 4.0 | 1.0 | 4.0 | 1.0 | 5.0 | 3.0 | 4.0 | 5.0 |

| 1 | 3.0 | 5.0 | 3.0 | 4.0 | 3.0 | 3.0 | 2.0 | 5.0 | 1.0 | 5.0 | ... | 1.0 | 2.0 | 4.0 | 2.0 | 3.0 | 1.0 | 4.0 | 2.0 | 5.0 | 3.0 |

| 2 | 2.0 | 3.0 | 4.0 | 4.0 | 3.0 | 2.0 | 1.0 | 3.0 | 2.0 | 5.0 | ... | 5.0 | 1.0 | 2.0 | 1.0 | 4.0 | 2.0 | 5.0 | 3.0 | 4.0 | 4.0 |

| 3 | 2.0 | 2.0 | 2.0 | 3.0 | 4.0 | 2.0 | 2.0 | 4.0 | 1.0 | 4.0 | ... | 4.0 | 2.0 | 5.0 | 2.0 | 3.0 | 1.0 | 4.0 | 4.0 | 3.0 | 3.0 |

| 4 | 3.0 | 3.0 | 3.0 | 3.0 | 5.0 | 3.0 | 3.0 | 5.0 | 3.0 | 4.0 | ... | 5.0 | 1.0 | 5.0 | 1.0 | 5.0 | 1.0 | 5.0 | 3.0 | 5.0 | 5.0 |

5 rows × 50 columns

With the dataset ready, prepare a CFA model that tests whether each of the ten questions load onto their respective latent factor (e.g. EXT1, EXT2 on Extraversion, OPN1, OPN10 onto Openness, and so on).

Show code cell source

# Your answer here

# Model string

mdspec = """

extra =~ EXT1 + EXT2 + EXT3 + EXT4 + EXT5 + EXT6 + EXT7 + EXT8 + EXT9 + EXT10

open =~ OPN1 + OPN2 + OPN3 + OPN4 + OPN5 + OPN6 + OPN7 + OPN8 + OPN9 + OPN10

consc =~ CSN1 + CSN2 + CSN3 + CSN4 + CSN5 + CSN6 + CSN7 + CSN8 + CSN9 + CSN10

agree =~ AGR1 + AGR2 + AGR3 + AGR4 + AGR5 + AGR6 + AGR7 + AGR8 + AGR9 + AGR10

neuro =~ EST1 + EST2 + EST3 + EST4 + EST5 + EST6 + EST7 + EST8 + EST9 + EST10

"""

# Create model

model = sem.Model(mdspec)

# Fit it

model.fit(big5) # EDIT THIS

SolverResult(fun=5.36296039998346, success=True, n_it=57, x=array([-1.06200145, 0.95417843, -1.030203 , 1.08329996, -0.81910411,

1.18386593, -0.80722522, 0.94436833, -1.02606913, -0.86188908,

0.96142689, -0.74789625, 1.01326436, -0.82631873, 0.78080518,

1.01344284, 0.62361959, 1.18156628, -1.09149167, 0.5441523 ,

-1.07460931, 1.11229093, -1.2430879 , 0.77959885, -0.80312943,

1.03367876, 0.60953939, -1.12362769, 0.68604513, -1.43895163,

1.20265913, -1.16395247, 1.14358636, -1.07432794, -1.40957848,

-0.85553933, -0.65361763, 0.74696009, -0.480897 , 0.72871028,

1.06146634, 1.0741923 , 1.14944716, 0.97562448, 0.94016725,

1.43674748, 0.90548163, 0.84803146, 1.4625594 , 0.52875896,

0.85281192, 1.00831611, 0.78594071, 0.78610704, 0.64851546,

0.87507333, 0.91986045, 1.32519691, 0.94056273, 0.9785403 ,

0.99411219, 1.21806196, 0.96280453, 0.97306684, 1.08424766,

0.9874805 , 1.02212687, 1.1566158 , 0.89358005, 1.37933939,

1.18518101, 0.83121266, 0.72093211, 0.71466859, 0.89804327,

0.86073212, 0.92737012, 0.92128132, 0.8048323 , 0.74866263,

0.76710141, 1.00770347, 0.92762256, 1.13732951, 1.1543183 ,

0.93733664, 0.50929538, 0.93945862, 0.8324595 , 0.96393237,

0.57244287, 0.94478514, 0.77220248, 1.16343713, 0.92790156,

0.35866303, 0.50955039, -0.06273015, 0.73729111, -0.17700163,

0.05657929, -0.1776681 , 0.82428062, -0.01288336, -0.18473819,

0.40169197, -0.06727421, 0.04077642, 0.11323818, -0.03575431]), message='Optimization terminated successfully', name_method='SLSQP', name_obj='MLW')

Check the model fit statistics:

Show code cell source

# Your answer here

sem.calc_stats(model)

| DoF | DoF Baseline | chi2 | chi2 p-value | chi2 Baseline | CFI | GFI | AGFI | NFI | TLI | RMSEA | AIC | BIC | LogLik | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Value | 1165 | 1225 | 5.445234e+06 | 0.0 | 1.902557e+07 | 0.713837 | 0.713794 | 0.699054 | 0.713794 | 0.699099 | 0.067841 | 209.274079 | 1510.654937 | 5.36296 |

Based on this truly massive dataset, what do we make of the Big 5 model?

5. Exploring and confirming#

For the final exercise, we’ll see how EFA and CFA work together.

The last dataset we’ll see contains a series of scales that researchers thought might measure the ‘DISC’ personality model (see here), which has four central traits:

Dominance: active use of force to overcome resistance in the environment

Inducement: use of charm in order to deal with obstacles

Submission: warm and voluntary acceptance of the need to fulfill a request

Compliance: fearful adjustment to a superior force.

This model of personality is used in business, but has no real empirical underpinning. To try to address this, researchers took four sub-scale measures from the IPIP, which measure similar-sorts of things - namely, assertiveness, social confidence, adventurousness, and dominance.

Our goal now will be to explore a set of latent factors in half of this data, and then confirm it in the other half. Thus, we will use both EFA and CFA.

First, lets read in the data, which is accessible from here: http://openpsychometrics.org/_rawdata/AS+SC+AD+DO.zip

Extract the data.csv file and read it in, and show the head. I’ve renamed it data-disc.csv my end and read it in with that title.

Show code cell source

# Your answer here

disc = pd.read_csv('data-disc.csv')

disc.head()

| AS1 | AS2 | AS3 | AS4 | AS5 | AS6 | AS7 | AS8 | AS9 | AS10 | ... | DO3 | DO4 | DO5 | DO6 | DO7 | DO8 | DO9 | DO10 | age | gender | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 4 | 4 | 3 | 3 | 5 | 4 | 1 | 3 | 1 | 1 | ... | 3 | 1 | 3 | 2 | 5 | 4 | 2 | 1 | 29 | 2 |

| 1 | 4 | 3 | 4 | 4 | 3 | 2 | 3 | 3 | 4 | 3 | ... | 3 | 2 | 3 | 2 | 3 | 3 | 2 | 2 | 49 | 2 |

| 2 | 5 | 4 | 4 | 5 | 3 | 3 | 2 | 2 | 1 | 1 | ... | 3 | 3 | 3 | 4 | 4 | 5 | 2 | 3 | 52 | 1 |

| 3 | 4 | 3 | 3 | 2 | 3 | 3 | 4 | 3 | 4 | 1 | ... | 3 | 3 | 4 | 4 | 4 | 5 | 3 | 1 | 34 | 2 |

| 4 | 4 | 4 | 4 | 4 | 4 | 3 | 2 | 1 | 2 | 0 | ... | 4 | 3 | 4 | 3 | 5 | 5 | 4 | 4 | 52 | 2 |

5 rows × 42 columns

The column names here represent the four sub-scales, like so:

Assertiveness

AS1 Express myself easily.

AS2 Try to lead others.

AS3 Automatically take charge.

AS4 Know how to convince others.

AS5 Am the first to act.

AS6 Take control of things.

AS7 Wait for others to lead the way.

AS8 Let others make the decisions.

AS9 Am not highly motivated to succeed.

AS10 Can’t come up with new ideas.

Social Confidence

SC1 Feel comfortable around people.

SC2 Don’t mind being the center of attention.

SC3 Am good at making impromptu speeches.

SC4 Express myself easily.

SC5 Have a natural talent for influencing people.

SC6 Hate being the center of attention.

SC7 Lack the talent for influencing people.

SC8 Often feel uncomfortable around others.

SC9 Don’t like to draw attention to myself.

SC10 Have little to say.

Adventurousness

AD1 Prefer variety to routine.

AD2 Like to visit new places.

AD3 Interested in many things.

AD4 Like to begin new things.

AD5 Prefer to stick with things that I know.

AD6 Dislike changes.

AD7 Don’t like the idea of change.

AD8 Am a creature of habit.

AD9 Dislike new foods.

AD10 Am attached to conventional ways.

Dominance

DO1 Try to surpass others’ accomplishments.

DO2 Try to outdo others.

DO3 Am quick to correct others.

DO4 Impose my will on others.

DO5 Demand explanations from others.

DO6 Want to control the conversation.

DO7 Am not afraid of providing criticism.

DO8 Challenge others’ points of view.

DO9 Lay down the law to others.

DO10 Put people under pressure.

The first task is to prepare the data. Drop the age and gender columns, and the use the .sample dataframe method to extract 50% of the data for exploration and the other half for confirmation. This can be a tricky step, so take care.

Show code cell source

# Your answer here

# Drop columns

disc = disc.drop(columns=['age', 'gender'])

# Sample half

explore = disc.sample(frac=.50, random_state=42)

# Get the other half not in the first half

confirm = disc[~disc.index.isin(explore.index)]

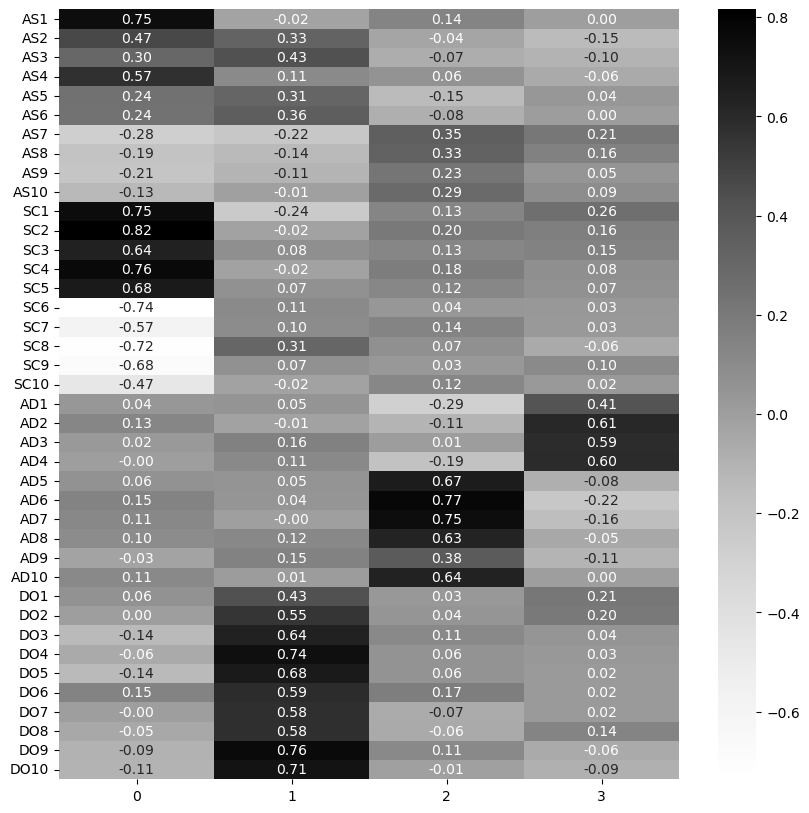

Fit an EFA to the first half of the data. Lets start with four factors, which would represent the four subscales. What does that solution look like? Fit it and examine a plot of the loadings.

If you want the figure to be larger, you can run the following lines of code before you make a heatmap:

import matplotlib.pyplot as plt plt.figure(figsize=(10, 10))

Show code cell source

# Your answer here

# EFA

efa = FactorAnalyzer(n_factors=4).fit(explore)

# Get loadings

loadings = pd.DataFrame(efa.loadings_, index=explore.columns)

# Plot

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 10))

sns.heatmap(loadings, annot=True, cmap='Grays', fmt='.2f')

<Axes: >

We can see immediately that aspects of the Assertiveness and Social Confidence scales align with the first factor, dominance with the second, adventurousness with the third, while some questions in adventurousness (e.g. 1-4) seem to align with the fourth factor. Examine the variance explained next.

Show code cell source

# Your answer here

efa.get_factor_variance()

(array([6.4104039 , 4.89054046, 3.33641926, 1.72314879]),

array([0.1602601 , 0.12226351, 0.08341048, 0.04307872]),

array([0.1602601 , 0.28252361, 0.36593409, 0.40901281]))

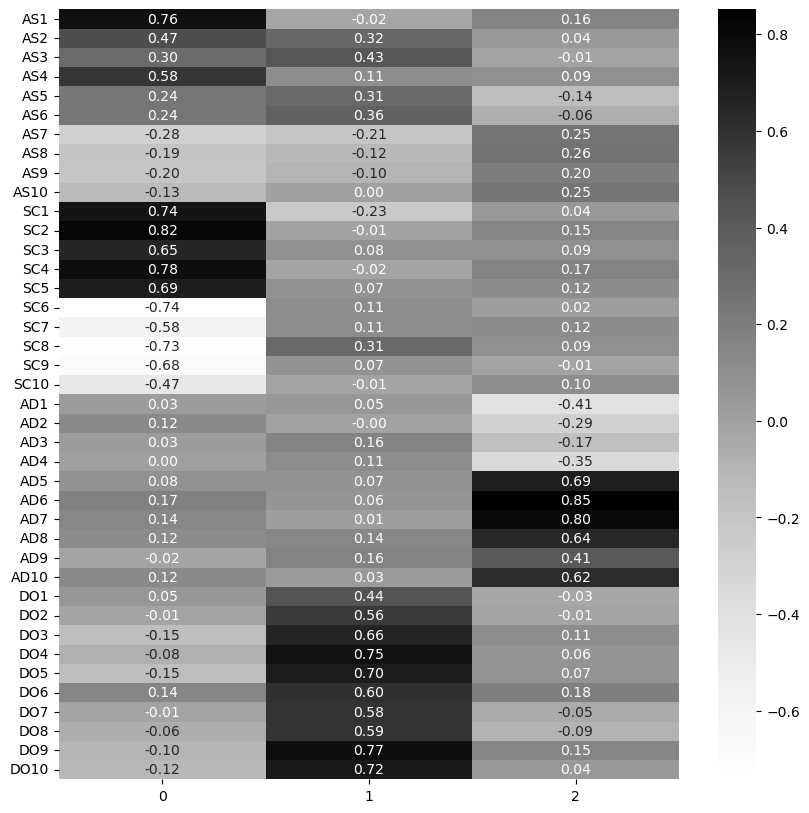

This explains around 40%, with the most common from the first two factors. Perhaps we could do away with a 4 factor solution and retain a 3 factor one? Fit that below.

Show code cell source

# Your answer here

# EFA

efa = FactorAnalyzer(n_factors=3).fit(explore)

# Get loadings

loadings = pd.DataFrame(efa.loadings_, index=explore.columns)

# Plot

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 10))

sns.heatmap(loadings, annot=True, cmap='Grays', fmt='.2f')

<Axes: >

Examine the variance of this fit.

Show code cell source

# Your answer here

efa.get_factor_variance()

(array([6.54110144, 5.0344487 , 3.69496587]),

array([0.16352754, 0.12586122, 0.09237415]),

array([0.16352754, 0.28938875, 0.3817629 ]))

This loses only around 2% variance and has some more informative factors. We’ll then say there are three factors underpinning this four-questionnaire test. The first captures Assertiveness and Social Confidence, the second dominance, and the third adventurousness.

Now, how might we translate this setup into a CFA model that we can test on the other half of the data?

One straightforward way would be to use a coarse measure as just described above - that is, all questions for assertiveness and social confidence go on one factor, all dominance questions on another, and all adventurousness questions on the final one.

Build that below, but using the other slice of the data to confirm whether this structure ‘holds’. Check the fit statistics.

Show code cell source

# Your answer here

# Model string

mdspec = """

latent1 =~ AS1 + AS2 + AS3 + AS4 + AS5 + AS6 + AS7 + AS8 + AS9 + AS10 + SC1 + SC2 + SC3 + SC4 + SC5 + SC6 + SC7 + SC8 + SC9 + SC10

latent2 =~ DO1 + DO2 + DO3 + DO4 + DO5 + DO6 + DO7 + DO8 + DO9 + DO10

latent3 =~ AD1 + AD2 + AD3 + AD4 + AD5 + AD6 + AD7 + AD8 + AD9 + AD10

"""

# Create model

model = sem.Model(mdspec)

# Fit it

model.fit(confirm)

# Fit statistics

sem.calc_stats(model)

| DoF | DoF Baseline | chi2 | chi2 p-value | chi2 Baseline | CFI | GFI | AGFI | NFI | TLI | RMSEA | AIC | BIC | LogLik | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Value | 737 | 780 | 4041.373173 | 0.0 | 9776.734335 | 0.632714 | 0.586634 | 0.562516 | 0.586634 | 0.611285 | 0.094506 | 149.930922 | 500.239906 | 8.034539 |

That does not look great! Inspect whether the individual coefficients are associated in the way we’d expect:

Show code cell source

# Your answer here

model.inspect(std_est=True).query('op == "~"')

| lval | op | rval | Estimate | Est. Std | Std. Err | z-value | p-value | |

|---|---|---|---|---|---|---|---|---|

| 0 | AS1 | ~ | latent1 | 1.000000 | 0.704852 | - | - | - |

| 1 | AS2 | ~ | latent1 | 0.818380 | 0.609185 | 0.063324 | 12.923657 | 0.0 |

| 2 | AS3 | ~ | latent1 | 0.826251 | 0.596697 | 0.065244 | 12.664005 | 0.0 |

| 3 | AS4 | ~ | latent1 | 0.803585 | 0.645872 | 0.058724 | 13.68413 | 0.0 |

| 4 | AS5 | ~ | latent1 | 0.668898 | 0.496057 | 0.063347 | 10.559247 | 0.0 |

| 5 | AS6 | ~ | latent1 | 0.621050 | 0.471490 | 0.061842 | 10.042551 | 0.0 |

| 6 | AS7 | ~ | latent1 | -0.679325 | -0.518800 | 0.061552 | -11.036618 | 0.0 |

| 7 | AS8 | ~ | latent1 | -0.452216 | -0.344830 | 0.061406 | -7.364376 | 0.0 |

| 8 | AS9 | ~ | latent1 | -0.400243 | -0.269313 | 0.069506 | -5.758391 | 0.0 |

| 9 | AS10 | ~ | latent1 | -0.311342 | -0.229752 | 0.063346 | -4.914959 | 0.000001 |

| 10 | SC1 | ~ | latent1 | 0.894873 | 0.589880 | 0.071463 | 12.522108 | 0.0 |

| 11 | SC2 | ~ | latent1 | 1.086737 | 0.657465 | 0.07805 | 13.923668 | 0.0 |

| 12 | SC3 | ~ | latent1 | 1.029209 | 0.600678 | 0.080742 | 12.746809 | 0.0 |

| 13 | SC4 | ~ | latent1 | 0.957403 | 0.651346 | 0.069391 | 13.797292 | 0.0 |

| 14 | SC5 | ~ | latent1 | 0.890399 | 0.631887 | 0.066474 | 13.394672 | 0.0 |

| 15 | SC6 | ~ | latent1 | -0.957331 | -0.614929 | 0.073398 | -13.042958 | 0.0 |

| 16 | SC7 | ~ | latent1 | -0.773334 | -0.599738 | 0.060762 | -12.727266 | 0.0 |

| 17 | SC8 | ~ | latent1 | -0.874095 | -0.579628 | 0.071015 | -12.30852 | 0.0 |

| 18 | SC9 | ~ | latent1 | -0.910533 | -0.601252 | 0.071365 | -12.758753 | 0.0 |

| 19 | SC10 | ~ | latent1 | -0.769383 | -0.533247 | 0.067851 | -11.33938 | 0.0 |

| 20 | DO1 | ~ | latent2 | 1.000000 | 0.538283 | - | - | - |

| 21 | DO2 | ~ | latent2 | 1.142825 | 0.592763 | 0.113915 | 10.032241 | 0.0 |

| 22 | DO3 | ~ | latent2 | 1.144616 | 0.666074 | 0.106152 | 10.782812 | 0.0 |

| 23 | DO4 | ~ | latent2 | 1.285773 | 0.744064 | 0.112106 | 11.46927 | 0.0 |

| 24 | DO5 | ~ | latent2 | 1.282494 | 0.688627 | 0.116664 | 10.99304 | 0.0 |

| 25 | DO6 | ~ | latent2 | 1.035638 | 0.610491 | 0.101301 | 10.223355 | 0.0 |

| 26 | DO7 | ~ | latent2 | 0.996247 | 0.571367 | 0.101729 | 9.793166 | 0.0 |

| 27 | DO8 | ~ | latent2 | 0.957089 | 0.598186 | 0.094842 | 10.09137 | 0.0 |

| 28 | DO9 | ~ | latent2 | 1.040693 | 0.604591 | 0.102426 | 10.160443 | 0.0 |

| 29 | DO10 | ~ | latent2 | 1.177656 | 0.670289 | 0.108812 | 10.822828 | 0.0 |

| 30 | AD1 | ~ | latent3 | 1.000000 | 0.478837 | - | - | - |

| 31 | AD2 | ~ | latent3 | 0.596162 | 0.342152 | 0.094078 | 6.336898 | 0.0 |

| 32 | AD3 | ~ | latent3 | 0.437053 | 0.268654 | 0.084017 | 5.201967 | 0.0 |

| 33 | AD4 | ~ | latent3 | 0.769467 | 0.427006 | 0.103145 | 7.460015 | 0.0 |

| 34 | AD5 | ~ | latent3 | -1.349486 | -0.698710 | 0.13627 | -9.902998 | 0.0 |

| 35 | AD6 | ~ | latent3 | -1.606016 | -0.819433 | 0.152228 | -10.550087 | 0.0 |

| 36 | AD7 | ~ | latent3 | -1.390536 | -0.778319 | 0.134248 | -10.357974 | 0.0 |

| 37 | AD8 | ~ | latent3 | -1.274979 | -0.643348 | 0.133857 | -9.524967 | 0.0 |

| 38 | AD9 | ~ | latent3 | -0.792595 | -0.407009 | 0.109885 | -7.212967 | 0.0 |

| 39 | AD10 | ~ | latent3 | -1.226555 | -0.634494 | 0.129663 | -9.459547 | 0.0 |

These seem to match the pattern seen in the loadings, broadly, but our model fit suggests this is not good. As a final push, we could consider trying to reflect the loadings we saw from EFA more closely. From the loadings matrix we can actually discern which factor each question had the highest affinity with by using the .idxmax(axis='columns') command, like so:

loadings.idxmax(axis='columns')

Run that below and examine the output.

Show code cell source

# You answer here

loadings.idxmax(axis='columns')

AS1 0

AS2 0

AS3 1

AS4 0

AS5 1

AS6 1

AS7 2

AS8 2

AS9 2

AS10 2

SC1 0

SC2 0

SC3 0

SC4 0

SC5 0

SC6 1

SC7 2

SC8 1

SC9 1

SC10 2

AD1 1

AD2 0

AD3 1

AD4 1

AD5 2

AD6 2

AD7 2

AD8 2

AD9 2

AD10 2

DO1 1

DO2 1

DO3 1

DO4 1

DO5 1

DO6 1

DO7 1

DO8 1

DO9 1

DO10 1

dtype: int64

What is interesting here is that reveals a rather disparate pattern for the assertiveness scale. Some questions loading on factor 0 (the first) while others are more associated with the second factor, which was related to dominance. Consider AS3 - “automatically take charge”. Should we be surprised this is more closely associated with a different factor? We can try to recreate this by tying each question as shown above to a specific factor. This is a tricky process. See if you can build this model below and examnine its fit statistic. It will more closely resemble the EFA, and may emerge as a better model.

Show code cell source

# Your answer here

mdspec = """

latent1 =~ AS1 + AS2 + AS4 + SC1 + SC2 + SC3 + SC4 + SC5 + AD2

latent2 =~ DO1 + DO2 + DO3 + DO4 + DO5 + DO6 + DO7 + DO8 + DO9 + DO10 + AS3 + AS5 + AS6 + SC6 + SC8 + SC9 + AD1 + AD3 + AD4

latent3 =~ AS7 + AS8 + AS9 + AS10 + SC7 + SC10 + AD5 + AD6 + AD7 + AD8 + AD9 + AD10

"""

# Create model

model = sem.Model(mdspec)

# Fit it

model.fit(confirm)

# Fit statistics

sem.calc_stats(model)

| DoF | DoF Baseline | chi2 | chi2 p-value | chi2 Baseline | CFI | GFI | AGFI | NFI | TLI | RMSEA | AIC | BIC | LogLik | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Value | 737 | 780 | 4906.10706 | 0.0 | 9776.734335 | 0.536598 | 0.498185 | 0.468907 | 0.498185 | 0.509561 | 0.106154 | 146.492616 | 496.8016 | 9.753692 |

Amusingly, this is even worse. Despite our best efforts, we’re unable to create a solid, stable set of latent variables that underpin this collection of data. This is a common experience, and the field of psychology has numerous measurement issues to which poor latent factor models contribute. Nonetheless, EFA and CFA are incredibly powerful approaches but they need to be treated with a lot of circumspection.